January 28, 2026

9 Cloud Cost Management Strategies Dominating 2026


Cloud bills are not just a math problem anymore. They are the output of three things stacked on top of each other.

Architecture decisions. Usage behavior. Pricing complexity that changes faster than most teams update their docs.

So when someone says, “Our bill is high because we have too many resources”, yeah, sometimes. But in 2026, it is usually more subtle than that. You can be “rightsized” on compute and still bleed money through data movement, managed service features nobody uses, or a Kubernetes cluster that looks efficient on paper but is quietly over-reserving.

Also, 2026 is different because the cloud is different.

You have more managed services everywhere. More Kubernetes as the default substrate. More multi-cloud reality, even when people swear they are single cloud. And a lot more AI and analytics workloads.

Plus, the culture shifted. Teams experiment faster. Infrastructure is self-serve. A good engineer can spin up something expensive in minutes, with zero bad intent. Just moving fast.

If you are here, you probably want practical cloud cost management strategies. Not “turn off unused instances” as a lifestyle tip. You want tools, yes, but also a repeatable way of running this. A model.

So that is the promise of this guide.

By the end, you should have a clear path toward:

Predictable spend and fewer surprises

Faster decisions using unit cost

Sustainable optimization that does not depend on one hero doing quarterly cleanup

What “cloud cost management” actually means in 2026 (FinOps + engineering)

People mix up cloud cost management and cloud cost optimization. The distinction matters.

Cloud cost optimization is the action. Rightsizing, commitments, storage tiering, fixing egress, scaling smarter. These actions look largely the same whether you run on AWS, Azure, or GCP.

Cloud cost management is the system that keeps optimization happening without drama. Ownership, measurement, governance, workflows, cadences.

In 2026, cost management usually means FinOps, but not as a finance project. More like an operating model that forces shared accountability across:

Finance (budgets, forecasting, reporting)

Engineering (architecture, performance, automation)

Product (unit economics, feature tradeoffs)

Procurement (commitments, negotiations, vendor management)

Traditional FinOps is often explained as three phases:

Inform: visibility, allocation, reporting, unit cost.

Optimize: commitments, rightsizing, scaling, reducing waste. This is where cloud cost optimization comes into play.

Operate: automation, governance, continuous improvement, cadence.

Most teams get stuck because they skip “Inform” and jump straight to “Optimize”, then argue for weeks because nobody trusts the numbers. Or they optimize once, save money, then the bill crawls back up because there was no system.

Common anti-patterns we keep seeing:

“Cost is finance’s job.” Finance can’t fix your NAT gateway egress bill.

Quarterly cleanups. Good for morale, bad for reality.

Optimization without measurement. You change things, maybe save money, but cannot prove it, cannot sustain it.

Okay, now we build the baseline.

Before you optimize: Baseline your spend in 30 to 60 minutes

This part may seem dull, but it is the quickest way to find where the money actually goes. You can build a useful baseline in under an hour if you keep it simple.

Here is a quick checklist:

Top services by cost (compute, storage, data, network, managed databases, Kubernetes, AI)

Top accounts, subscriptions, or projects

Top regions

Top teams or cost centers, if you have any allocation at all

Then segment spend into buckets that help you make decisions:

Production vs non-prod

Shared platform vs product workloads

Variable vs fixed

Variable: autoscaling, usage-based analytics, egress, per-request services

Fixed: baseline compute, always-on databases, reserved capacity

Now pick one north star KPI. Not ten.

A unit cost that maps to how your business actually works. Examples:

Cost per 1,000 requests

Cost per active customer

Cost per GB processed

Cost per training run

Cost per 1,000 inferences

The output of this baseline should be a short list of levers. The 80/20. Usually 2 to 4 areas where effort actually matters.

If you do nothing else, do this. Because everything below works better when you know where the spend really is.
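Here is a minimal sketch of the baseline math, assuming billing data has already been exported into simple records. The field names (`service`, `env`, `cost`), the sample figures, and the monthly request count are all hypothetical; real exports such as the AWS Cost and Usage Report or the GCP billing export to BigQuery use their own schemas.

```python
from collections import defaultdict

# Hypothetical monthly line items; real billing exports use different columns.
line_items = [
    {"service": "compute", "env": "prod", "cost": 42_000.0},
    {"service": "managed_db", "env": "prod", "cost": 18_000.0},
    {"service": "storage", "env": "dev", "cost": 6_000.0},
    {"service": "network", "env": "prod", "cost": 9_000.0},
    {"service": "kubernetes", "env": "dev", "cost": 4_000.0},
    {"service": "ai", "env": "prod", "cost": 1_000.0},
]


def totals_by(items, key):
    """Sum cost along one dimension, sorted from most to least expensive."""
    sums = defaultdict(float)
    for item in items:
        sums[item[key]] += item["cost"]
    return sorted(sums.items(), key=lambda kv: kv[1], reverse=True)


def pareto_levers(ranked, share=0.8):
    """Smallest set of top entries that together cover `share` of spend."""
    total = sum(cost for _, cost in ranked)
    levers, running = [], 0.0
    for name, cost in ranked:
        levers.append(name)
        running += cost
        if running >= share * total:
            break
    return levers


by_service = totals_by(line_items, "service")
by_env = totals_by(line_items, "env")
levers = pareto_levers(by_service)

# North star KPI: cost per 1,000 requests (the request count is assumed).
monthly_requests = 400_000_000
cost_per_1k_requests = sum(c for _, c in by_service) / (monthly_requests / 1000)
```

With these sample numbers, three services (compute, managed databases, network) cover at least 80 percent of spend, which is the short list of levers to work on first.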

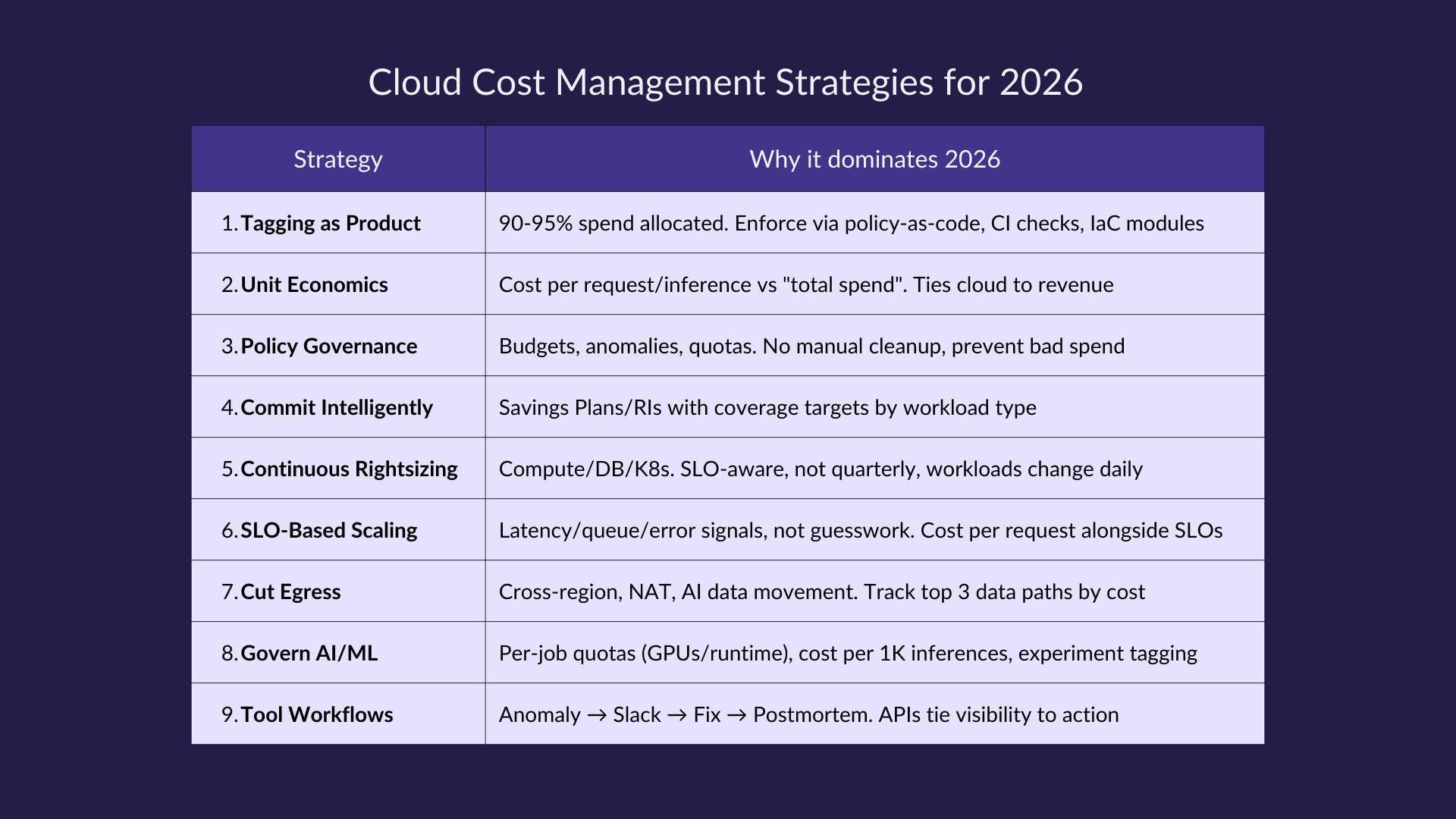

Cloud cost management strategies you need in 2026

These are the cloud cost management strategies that keep holding up across AWS, Azure, and GCP. Across Kubernetes, data platforms, and AI workloads. They are not tied to one vendor feature.

Implementation order matters, by the way.

Start with visibility and governance first. Then commitments and rightsizing. Then workload-aware automation. If you flip that order, you will still get savings, but it will feel chaotic and fragile.

1) Treat tagging as a product: enforce ownership, allocation, and chargeback/showback

Tagging is still the least glamorous part of cloud management, yet it is also the most powerful. In 2026, effective tagging is essential because, without clean allocation, you cannot manage anything effectively. You cannot even argue productively.

A minimal tagging schema that works for most organizations includes:

owner (human or team alias)

team or cost_center

environment (prod, staging, dev)

application or service

product (if you have multiple products)

Optional but useful:

data_sensitivity or compliance

The key to successful tagging is not just the schema itself, but its enforcement.

Here are some ways teams can enforce tagging without relying on polite reminders:

Policy as code (cloud policies, org policies, admission controllers)

Infrastructure as Code guardrails (Terraform modules that require tags)

Deployment checks in CI (fail builds if tags are missing)

Auto-tagging fallbacks (inherit tags from account, namespace, project, or cluster labels)
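A CI check combined with an auto-tagging fallback could look roughly like this. The required keys follow the minimal schema above. Treating `team`/`cost_center` and `application`/`service` as either-or pairs is an assumption, and so are the resource dictionaries themselves, which in practice might be parsed from an IaC plan.

```python
# Required keys follow the minimal schema; each tuple is an either-or group
# (an assumption about how "team or cost_center" should be read).
REQUIRED_ANY_OF = [
    ("owner",),
    ("team", "cost_center"),
    ("environment",),
    ("application", "service"),
]
ALLOWED_ENVIRONMENTS = {"prod", "staging", "dev"}


def effective_tags(resource_tags, inherited_tags):
    """Auto-tagging fallback: inherited tags (account, project, namespace,
    cluster labels) fill gaps, but explicit resource tags always win."""
    return {**inherited_tags, **resource_tags}


def tag_violations(tags):
    problems = []
    for group in REQUIRED_ANY_OF:
        if not any(tags.get(key) for key in group):
            problems.append("missing " + " or ".join(group))
    env = tags.get("environment")
    if env and env not in ALLOWED_ENVIRONMENTS:
        problems.append(f"invalid environment {env!r}")
    return problems


def check_plan(resources, inherited_tags):
    """Map each non-compliant resource name to its list of problems."""
    failures = {}
    for name, tags in resources.items():
        problems = tag_violations(effective_tags(tags, inherited_tags))
        if problems:
            failures[name] = problems
    return failures


# Hypothetical resources, e.g. extracted from an IaC plan during CI.
plan = {
    "api-server": {"owner": "team-payments", "service": "api", "environment": "prod"},
    "scratch-bucket": {"environment": "sandbox"},
}
failures = check_plan(plan, inherited_tags={"team": "payments"})
# A CI step would print `failures` and exit non-zero whenever it is not empty.
```

Here the inherited `team` tag rescues `api-server`, while `scratch-bucket` fails on three counts. In the spirit of the tip above, the same check can run in warn-only mode before it starts blocking builds.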

A rollout tip is to do showback first. This means you show teams their costs without immediately billing them internally. Implementing chargeback too early can create resistance and lead to people playing tagging games.

Also read: The Ultimate Guide to Chargeback Vs. Showback

What “good” looks like:

90 to 95 percent of the spend allocated

Allocation reviewed monthly

Unallocated spend treated like an incident
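Measuring the "90 to 95 percent allocated" target comes down to a simple ratio. This sketch treats any line item without an `owner` tag as unallocated. The record shape is hypothetical, and the 90 percent threshold is taken from the low end of the range above.

```python
def allocation_coverage(line_items, owner_key="owner"):
    """Return (share of spend with an owner tag, list of unallocated items)."""
    total = sum(item["cost"] for item in line_items)
    unallocated = [i for i in line_items if not i.get("tags", {}).get(owner_key)]
    unallocated_cost = sum(i["cost"] for i in unallocated)
    coverage = 1.0 if total == 0 else (total - unallocated_cost) / total
    return coverage, unallocated


def allocation_status(coverage, target=0.90):
    # Falling below target is handled like an incident, not a backlog item.
    return "ok" if coverage >= target else "incident"


# Hypothetical line items for one month.
items = [
    {"id": "i-1", "cost": 900.0, "tags": {"owner": "search"}},
    {"id": "i-2", "cost": 60.0, "tags": {"owner": "payments"}},
    {"id": "vol-9", "cost": 40.0, "tags": {}},
]
coverage, unallocated = allocation_coverage(items)
status = allocation_status(coverage)
```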

2) Make unit economics the default: cost per outcome beats “total spend”

Total spend on its own says little unless you run the cloud like a single monolith with no growth goals. Most teams do not.

Unit costs tie cloud spend to something real and measurable:

Requests served

Users active

Orders processed

GB ingested

Model inferences

Training runs completed

Choosing the right unit for measuring costs is part art and part politics. A good pattern to follow is:

One metric for executive reporting that is simple and stable.

A few deeper metrics for engineering that are more specific and diagnostic.

The implementation process involves:

Mapping cost dimensions to product telemetry (service name, namespace, project, workload ID).

Building dashboards that show unit cost trends over time.

Making it visible next to reliability metrics since cost is now a dimension of performance.

Then use unit costs to drive decisions such as:

Feature launches: what happens to cost per request if traffic doubles?

Scaling changes: is that lower latency worth the higher unit cost?

Pricing strategy: do we price below our unit cost during peak usage?

However, there are some pitfalls to avoid:

Vanity units that do not connect to revenue or user value.

Ignoring shared costs (clusters, observability, networking).

Not normalizing for seasonality, promotions, or product changes.
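As an illustration of the "ignoring shared costs" pitfall, the sketch below loads a shared platform bill onto each service before it computes cost per 1,000 requests. Splitting shared costs in proportion to direct spend is an assumed convention, not the only valid one, and all figures are hypothetical.

```python
def allocate_shared(direct_costs, shared_cost):
    """Spread a shared cost across services in proportion to direct spend.
    Proportional-to-spend is an assumed convention; usage-based keys
    (CPU-hours, requests) are often fairer for clusters and networking."""
    total_direct = sum(direct_costs.values())
    return {
        svc: cost + shared_cost * (cost / total_direct)
        for svc, cost in direct_costs.items()
    }


def cost_per_1k(fully_loaded_cost, units):
    """Unit cost per 1,000 units (requests, inferences, orders, ...)."""
    return fully_loaded_cost / (units / 1000)


# Hypothetical month: direct spend per service, one shared platform bill,
# and request counts pulled from product telemetry.
direct = {"checkout": 6000.0, "search": 2000.0}
shared_platform = 2000.0  # e.g. cluster, observability, networking
requests = {"checkout": 15_000_000, "search": 10_000_000}

loaded = allocate_shared(direct, shared_platform)
unit_costs = {svc: cost_per_1k(loaded[svc], requests[svc]) for svc in direct}
```

If you skipped the shared bill, checkout would appear to cost $0.40 per 1,000 requests instead of $0.50, which is exactly the kind of gap that makes unit costs untrustworthy.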

3) Replace “manual cleanup” with policy-based governance

Manual reviews do not scale. Not with self-serve infrastructure, dozens of teams, and workloads that change daily.

Governance does not mean slowing everything down. It means setting guardrails so most bad spend never happens.

Guardrails that usually pay off fast:

Budgets and budget alerts by team, product, and environment

Anomaly detection for spikes and strange patterns

Mandatory approvals for high-cost resource classes

Region restrictions (especially for expensive or compliance-sensitive regions)

Quota limits to prevent runaway experimentation

Policy as code examples that are common in 2026:

Block oversized instances in dev by default

Enforce lifecycle rules on storage buckets

Prevent egress-heavy public configurations from becoming the default path

Require labels and requests/limits for Kubernetes workloads
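Policy as code usually lives in a policy engine, for example cloud organization policies or, for Kubernetes, OPA Gatekeeper or Kyverno admission control. To show the shape of the rules without committing to any engine's syntax, here is a plain-Python sketch of three of the four examples above. The `Resource` fields and the 8-vCPU dev limit are hypothetical.

```python
from dataclasses import dataclass, field

DEV_MAX_VCPUS = 8  # assumed threshold for "oversized" in dev


@dataclass
class Resource:
    kind: str                  # "vm", "bucket", or "k8s_workload"
    env: str
    vcpus: int = 0
    lifecycle_rules: list = field(default_factory=list)
    labels: dict = field(default_factory=dict)
    containers: list = field(default_factory=list)  # dicts with "requests"/"limits"


def evaluate(resource):
    """Return a list of denial reasons; an empty list means the resource passes."""
    denials = []
    if resource.kind == "vm" and resource.env == "dev" and resource.vcpus > DEV_MAX_VCPUS:
        denials.append(f"dev VM has {resource.vcpus} vCPUs (max {DEV_MAX_VCPUS})")
    if resource.kind == "bucket" and not resource.lifecycle_rules:
        denials.append("bucket has no lifecycle rules")
    if resource.kind == "k8s_workload":
        if not resource.labels:
            denials.append("workload has no labels")
        for c in resource.containers:
            if not c.get("requests") or not c.get("limits"):
                denials.append(f"container {c.get('name', '?')} lacks requests/limits")
    return denials
```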

You also need an exception process. Lightweight. Otherwise, people will route around you.

Something like:

Request with reason + expected duration + owner

Auto expiration

Quick approval channel

Logged for audit
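You can model the exception flow as a small record that always carries an owner, a reason, and an expiry. The sketch below is hypothetical and in-memory; a real version would persist to a ticketing system or database, and the policy and resource names are made up.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class PolicyException:
    policy: str
    resource: str
    owner: str
    reason: str
    granted_at: datetime
    duration: timedelta

    @property
    def expires_at(self):
        return self.granted_at + self.duration

    def is_active(self, now):
        # Auto-expiration: the exception simply stops applying at expires_at.
        return self.granted_at <= now < self.expires_at


audit_log = []  # every grant is recorded for later audit


def grant(policy, resource, owner, reason, days, now):
    if not owner or not reason:
        raise ValueError("an exception needs an owner and a reason")
    exc = PolicyException(policy, resource, owner, reason, now, timedelta(days=days))
    audit_log.append(("granted", exc))
    return exc


def is_exempt(exceptions, policy, resource, now):
    """A denial is skipped only while a matching exception is unexpired."""
    return any(
        e.policy == policy and e.resource == resource and e.is_active(now)
        for e in exceptions
    )
```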

How to measure impact:

Fewer cost incidents

Reduced idle spend

Shorter time from detection to fix when anomalies happen

4) Commit intelligently: Savings Plans, RIs, Committed Use Discounts with coverage targets

Commitment discounts are still one of the biggest reliable levers for steady workloads. In 2026, that has not changed, even if the names differ across providers.

At a high level, most clouds offer:

Term-based discounts (commit to a term, pay less)

Committed use discounts (commit to usage level)

Reserved capacity models (reserve a specific capacity type)

How teams mess this up:

Committing before the baseline stabilizes

Over-committing because last month was high

Ignoring flexibility needs when the architecture is changing fast

A better approach is coverage targets by workload type:

Baseline production: high coverage target

Bursty services: lower coverage, rely on autoscaling, and spot where safe

Experimental workloads: no commitments, or very conservative

Operationalize it:

Monthly coverage review

Track utilization, not just purchase

Rebalance commitments as services move to containers, serverless, or managed platforms

Commitments should feel boring. That is a compliment.
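The monthly review really needs only two numbers. Coverage is the share of eligible usage that commitments paid for, and utilization is the share of purchased commitment that was actually used. The per-workload targets, the ±5-point band, and the 95 percent utilization floor below are illustrative assumptions, not provider guidance. All inputs are assumed to be dollar amounts for one month.

```python
# Illustrative coverage targets by workload type (assumptions, not guidance).
COVERAGE_TARGETS = {"baseline_prod": 0.80, "bursty": 0.40, "experimental": 0.0}
BAND = 0.05            # tolerated distance from target before acting
MIN_UTILIZATION = 0.95  # below this, commitments are going unused


def coverage(covered_usage, total_eligible_usage):
    """Share of eligible (on-demand-equivalent) usage covered by commitments."""
    return covered_usage / total_eligible_usage if total_eligible_usage else 0.0


def utilization(used_commitment, purchased_commitment):
    """Share of purchased commitment actually consumed."""
    return used_commitment / purchased_commitment if purchased_commitment else 1.0


def review(workload_type, covered, total, used, purchased):
    cov = coverage(covered, total)
    util = utilization(used, purchased)
    target = COVERAGE_TARGETS[workload_type]
    actions = []
    if cov < target - BAND:
        actions.append("consider buying more commitment")
    if cov > target + BAND or util < MIN_UTILIZATION:
        actions.append("do not buy more; rebalance or let expire")
    return round(cov, 3), round(util, 3), actions
```

Tracking utilization next to coverage is what catches over-commitment. High coverage with low utilization means you are paying for capacity nobody uses.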

Also read: AWS Savings Plans vs Reserved Instances: Choosing the Right Commitment for Your Cloud Costs

5) Automate rightsizing continuously (not quarterly): compute, databases, and data platforms

Rightsizing used to be a quarterly project. In 2026, that is basically broken.

Workloads change. Autoscaling changes utilization. New instance families show up. Managed services add features that change pricing. If you wait a quarter, you are always late.

Rightsizing priorities that usually matter most:

CPU and memory underutilization on compute

Over-provisioned managed databases (especially memory-heavy)

Oversized Kubernetes node pools and over-reserved requests

A safe approach looks like:

Start with recommendations, but do not apply them blindly

Make SLO-aware changes (latency, error rate, throughput)

Use safe rollbacks and canaries
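A continuous rightsizing job can start as simply as "p95 usage plus headroom, mapped to the smallest size that fits." The size catalog, the 30 percent headroom, and the size names below are hypothetical. The output is a recommendation to roll out behind a canary, not a change to apply directly.

```python
import statistics

# Hypothetical size catalog: (name, vCPUs, GiB of memory), smallest first.
SIZES = [("small", 2, 8), ("medium", 4, 16), ("large", 8, 32), ("xlarge", 16, 64)]


def p95(samples):
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    return statistics.quantiles(samples, n=20)[-1]


def recommend(cpu_cores_used, mem_gib_used, headroom=1.3):
    """Smallest size covering p95 CPU and memory plus headroom, or None if
    nothing in the catalog fits (a signal for manual review).
    Needs at least two samples per metric."""
    need_cpu = p95(cpu_cores_used) * headroom
    need_mem = p95(mem_gib_used) * headroom
    for name, vcpu, mem in SIZES:
        if vcpu >= need_cpu and mem >= need_mem:
            return name
    return None
```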

Non-obvious wins people miss:

Storage tiering (hot to warm to archive)

IOPS and throughput tuning on managed disks and databases

Shortening retention where it is overkill, especially logs and metrics

Safety checklist you actually follow, not just write down:

Performance baseline before changes

Canary deployments for critical services

Change windows where it matters

Explicit rollback plan

Also read: Kubernetes Performance Mastery: Expert Guide to Cluster Rightsizing

6) Embrace workload-aware scaling: autoscale with SLOs, not guesswork

Scaling based on guesswork is expensive. Scaling based on SLOs is still hard, but it is the right direction.

Core idea: scale based on demand signals and service objectives.

Signals teams use successfully:

Latency targets

Queue depth

Throughput

Error rates

Concurrency

Backlog age for async systems

Tactics:

Horizontal autoscaling for routine fluctuations in demand

Scheduled scaling for predictable patterns (weekday peaks, batch windows)

Scale to zero for non-prod and event-driven workloads
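For signals like queue depth or concurrency, the Kubernetes Horizontal Pod Autoscaler uses a proportional rule: desired replicas = ceil(current replicas * current metric / target metric). It skips scaling when that ratio is within a tolerance of 1.0 (0.1 by default). Here is a standalone Python version of the rule, with the min/max bounds as hypothetical parameters:

```python
import math


def desired_replicas(current_replicas, current_value, target_value,
                     min_replicas=1, max_replicas=50, tolerance=0.1):
    """Proportional scaling in the style of the Kubernetes HPA:
    desired = ceil(current * current_value / target_value), skipped when the
    ratio is within `tolerance` of 1.0, then clamped to [min, max]."""
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Example: 4 workers, 300 queued messages per worker, target 100 per worker.
replicas = desired_replicas(4, 300, 100)  # scales to 12
```

A proportional rule cannot bring a workload back up from zero replicas, since anything times zero is zero. That is why scale-to-zero setups rely on an event-driven trigger, such as a queue receiving work, to wake the workload up.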

Kubernetes-specific guidance that keeps coming up:

Rightsize requests and limits, because this is where waste hides

Configure the cluster autoscaler properly, so underused nodes are actually drained and removed

Bin packing matters. Bad bin packing is basically paying rent for empty apartments

Avoid over-reserved capacity, especially when teams copy-paste resource requests
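A quick way to surface copy-pasted requests is to compare requested CPU with observed p95 usage per workload. You can then view node capacity two ways: filled by requests, which is what the scheduler sees, and filled by actual usage. The 1.5x threshold and the sample numbers are assumptions for illustration.

```python
OVER_RESERVED_RATIO = 1.5  # assumed threshold for "requests far above usage"


def over_reservation(workloads):
    """Requested CPU divided by p95 CPU usage (cores), plus the flagged names."""
    ratios = {
        name: round(w["requested_cpu"] / w["p95_cpu"], 2)
        for name, w in workloads.items()
        if w["p95_cpu"] > 0
    }
    flagged = sorted(n for n, r in ratios.items() if r > OVER_RESERVED_RATIO)
    return ratios, flagged


def cluster_packing(allocatable_cpu, total_requested_cpu, total_used_cpu):
    """How full the nodes are by requests vs. by real usage. A large gap
    between the two is paying rent for empty apartments."""
    return {
        "request_utilization": round(total_requested_cpu / allocatable_cpu, 2),
        "usage_utilization": round(total_used_cpu / allocatable_cpu, 2),
    }


# Hypothetical workloads and cluster totals.
workloads = {
    "api": {"requested_cpu": 2.0, "p95_cpu": 0.5},
    "worker": {"requested_cpu": 1.0, "p95_cpu": 0.8},
}
ratios, flagged = over_reservation(workloads)
packing = cluster_packing(64, 48, 16)
```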

Tie scaling to cost:

Monitor cost per request or cost per inference alongside latency and error rate. If latency improves but unit cost doubles, that is a product decision, not an engineering win.

Common pitfall:

Autoscaling increases cost because thresholds are noisy or metrics are wrong. You scale up for nothing, then scale down too slowly. It feels like “we have autoscaling”, but it behaves like panic buying.
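One way to keep a noisy metric from triggering panic buying is to smooth it before it reaches the scaling rule. The median filter below is an illustrative choice, and the window size is an assumption. The Kubernetes HPA offers a related knob, the `behavior` stabilization windows (for example `scaleDown.stabilizationWindowSeconds`), which damp flapping. Tune that window so it does not make scale-down needlessly slow.

```python
import statistics
from collections import deque


class SmoothedSignal:
    """Median of the last `k` samples: a single spiky reading no longer
    triggers a scale-up, while a sustained change still comes through."""

    def __init__(self, k=5):
        self.samples = deque(maxlen=k)

    def update(self, value):
        self.samples.append(value)
        return statistics.median(self.samples)


signal = SmoothedSignal(k=5)
# One spike (900) is ignored; a sustained rise shows up after a few samples.
smoothed = [signal.update(v) for v in [100, 100, 100, 900, 100, 900, 900]]
```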

7) Cut egress and data movement costs: the “silent budget killer”

Egress is the silent budget killer because it does not show up in most architecture diagrams. But it shows up on the bill. Loudly.

In 2026, it is bigger because:

Multi-cloud architectures move data constantly

Data mesh patterns create more cross-domain movement

AI pipelines pull and push large datasets, checkpoints, embeddings, and logs

Top egress drivers to look for:

Cross-region traffic

NAT gateways for everything, even internal traffic

CDN misconfigurations

Inter-AZ chatter between microservices and data stores

Replication patterns that were set once and never revisited

Optimization plays that work across clouds:

Keep compute close to the data. Obvious, but often ignored

Cache strategically (CDN, edge, internal caches)

Reduce chatty microservices, batch requests

Compress and aggregate transfers

Review replication and backup policies, especially cross-region

Vendor strategy matters too:

Negotiate bandwidth tiers when possible. And understand pricing boundaries between services, because “inside the cloud” is not always cheap. Sometimes, one managed service talking to another is billed as if they were strangers.

Measurement tip:

Track cost by data path, source to destination. Set budgets for egress heavy systems. If you cannot name the top three data paths by cost, you are flying blind.
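Tracking cost by data path amounts to grouping transfer records by (source, destination). In the sketch below, the flow records, path names, and per-GB prices are hypothetical and are not current list prices. In practice the inputs would come from billing line items joined with flow logs.

```python
from collections import defaultdict

# Hypothetical transfer records: (source, destination, GB, USD per GB).
flows = [
    ("us-east-1/api", "eu-west-1/db-replica", 1200, 0.02),
    ("us-east-1/api", "internet", 3000, 0.09),
    ("us-east-1/etl", "us-east-1/warehouse", 8000, 0.0),
    ("us-east-1/api", "internet", 1000, 0.09),
    ("az-a/orders", "az-b/payments", 5000, 0.01),
]


def cost_by_path(flows):
    totals = defaultdict(float)
    for src, dst, gb, usd_per_gb in flows:
        totals[(src, dst)] += gb * usd_per_gb
    return totals


def top_paths(flows, n=3):
    """The n most expensive data paths, highest first."""
    return sorted(cost_by_path(flows).items(), key=lambda kv: kv[1], reverse=True)[:n]


def over_budget(flows, budgets):
    """Paths whose cost exceeds their (assumed) monthly egress budget."""
    totals = cost_by_path(flows)
    return {p: c for p, c in totals.items() if p in budgets and c > budgets[p]}
```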

Also read: Ingress vs. Egress: Why Data Egress Costs So Much

8) Govern AI/ML and analytics spend: budgets, quotas, and cost-aware experimentation

AI and analytics costs spike because experimentation is the point. People run things to learn. Great. But the bill does not care about curiosity.

In 2026, governing this category is a must.

Controls that work without killing velocity:

Per team budgets for AI and analytics

Per job quotas (max GPUs, max runtime, max cost)

Approval workflows for expensive runs

Mandatory tagging for experiments (owner, project, purpose, expected duration)
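An admission check for AI jobs can combine these controls: required tags, per-job quotas, the team's remaining budget, and an approval threshold. The limits, the blended GPU-hour price, and the `JobRequest` shape are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class JobRequest:
    team: str
    gpus: int
    max_runtime_hours: float
    gpu_hourly_usd: float  # assumed blended price per GPU-hour
    tags: dict


# Illustrative limits; real values depend on budgets and hardware.
QUOTA = {"max_gpus": 8, "max_runtime_hours": 24, "max_cost_usd": 2_000}
APPROVAL_THRESHOLD_USD = 500
REQUIRED_TAGS = {"owner", "project", "purpose", "expected_duration"}


def admit(job, team_budget_remaining_usd):
    """Return (decision, reason) where decision is admit, needs_approval, or reject."""
    # Worst-case cost: every GPU runs for the full allowed runtime.
    est_cost = job.gpus * job.max_runtime_hours * job.gpu_hourly_usd
    missing = REQUIRED_TAGS - set(job.tags)
    if missing:
        return "reject", f"missing tags: {sorted(missing)}"
    if job.gpus > QUOTA["max_gpus"] or job.max_runtime_hours > QUOTA["max_runtime_hours"]:
        return "reject", "exceeds per-job GPU or runtime quota"
    if est_cost > QUOTA["max_cost_usd"] or est_cost > team_budget_remaining_usd:
        return "reject", f"estimated ${est_cost:,.0f} exceeds cost quota or team budget"
    if est_cost > APPROVAL_THRESHOLD_USD:
        return "needs_approval", f"estimated ${est_cost:,.0f}"
    return "admit", f"estimated ${est_cost:,.0f}"
```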

Optimization tactics for AI and ML:

Spot or preemptible where safe

Rightsize GPU and accelerator selection, avoid overkill

Checkpointing so failures do not restart from zero

Batching and caching for inference, especially for repetitive prompts
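Checkpointing is what makes spot or preemptible capacity safe for training. The toy loop below saves progress every few steps and resumes from the last checkpoint after a simulated interruption. The JSON file and the float `state` are stand-ins for real model and optimizer state; frameworks provide their own save and load utilities.

```python
import json
import os
import tempfile


def load_checkpoint(path):
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "state": 0.0}


def save_checkpoint(path, ckpt):
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        json.dump(ckpt, f)
    os.replace(tmp, path)  # atomic rename: never leaves a half-written checkpoint


class Preempted(Exception):
    pass


def train(total_steps, path, every=10, preempt_at=None):
    """Resume from the last checkpoint; `preempt_at` simulates a spot interruption."""
    ckpt = load_checkpoint(path)
    step, state = ckpt["step"], ckpt["state"]
    steps_run = 0
    while step < total_steps:
        if preempt_at is not None and step == preempt_at:
            raise Preempted(step)
        state += 1.0  # placeholder for one optimizer step
        step += 1
        steps_run += 1
        if step % every == 0:
            save_checkpoint(path, {"step": step, "state": state})
    return state, steps_run


ckpt_path = os.path.join(tempfile.mkdtemp(), "ckpt.json")
try:
    train(100, ckpt_path, preempt_at=57)
except Preempted:
    pass  # e.g. the spot instance was reclaimed at step 57
final_state, resumed_steps = train(100, ckpt_path)  # restarts from step 50, not 0
```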

Analytics controls that pay off fast:

Workload management and query governance

Auto-suspend and auto-resume for warehouses

Separate dev and test from production warehouses

Guardrails on “scan the world” queries
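Many warehouses can estimate how much data a query will scan before it runs; BigQuery's dry-run option is one example. A guardrail can then refuse oversized scans. In this sketch the byte estimate is simply passed in, and the per-environment limits and the per-TiB price are illustrative assumptions, so check your provider's current pricing.

```python
# Assumed limits and price; replace with your own policy and provider pricing.
MAX_SCAN_BYTES = {"dev": 50 * 1024**3, "prod": 2 * 1024**4}
USD_PER_TIB = 6.25


def check_query(estimated_bytes, env, has_partition_filter):
    """Return (allowed, message) for a query given its estimated scan size."""
    est_cost = estimated_bytes / 1024**4 * USD_PER_TIB
    if estimated_bytes > MAX_SCAN_BYTES[env]:
        gib = estimated_bytes / 1024**3
        return False, f"would scan {gib:,.0f} GiB (${est_cost:,.2f}); limit exceeded"
    if env == "prod" and not has_partition_filter:
        return False, "add a partition filter before scanning production tables"
    return True, f"ok, est. ${est_cost:,.2f}"
```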

Define success in units that match the work:

Cost per training run

Cost per 1,000 inferences

Cost per query, or cost per GB processed

If you only track “monthly warehouse spend”, you will always be surprised.

Also read: Cloud Cost Governance: Pillars, Tools and Best Practices

9) Standardize tool-driven workflows: from cost visibility to automated actions

Tools do not replace a cloud cost strategy. But in 2026, you do need FinOps tools that connect visibility to action.

Good FinOps tools usually cover:

Allocation and chargeback or showback

Anomaly detection

Forecasting

Recommendations

Automated remediation with approvals and audit trails

Tool stack categories most teams end up with:

Native cloud billing tools (AWS, Azure, GCP)

Third-party cloud cost management tools

Kubernetes cost tools

FinOps platforms that tie it together

Workflow examples that are worth standardizing:

Anomaly detected → Slack or ticket → owner assigned → fix → postmortem note

Recommendation generated → approval → automated change → verify SLO impact
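The first workflow (anomaly → ticket → owner) can be sketched with a trailing-baseline detector and a routing step. The 14-day window, the 3-standard-deviation threshold, the $100 minimum increase, the ticket fields, and the `finops-triage` fallback are all hypothetical. Commercial tools use more sophisticated models.

```python
import statistics


def detect_anomaly(daily_costs, window=14, k=3.0, min_abs_increase=100.0):
    """Flag the latest day if it exceeds the trailing mean by k population
    standard deviations and by at least `min_abs_increase` dollars."""
    if len(daily_costs) <= window:
        return None
    history = daily_costs[-window - 1:-1]
    today = daily_costs[-1]
    mean = statistics.fmean(history)
    threshold = mean + k * statistics.pstdev(history)
    if today > threshold and today - mean >= min_abs_increase:
        return {"today": today, "expected": round(mean, 2), "threshold": round(threshold, 2)}
    return None


def route(service, anomaly, owners):
    """Turn an anomaly into a ticket assigned to the owning team."""
    return {
        "title": f"Cost anomaly: {service} at ${anomaly['today']:,.0f}/day",
        "assignee": owners.get(service, "finops-triage"),
        "status": "open",
        "checklist": ["fix", "verify", "postmortem note"],
    }


history = [900.0, 1100.0] * 7  # two weeks of hypothetical daily spend
anomaly = detect_anomaly(history + [1800.0])
ticket = route("warehouse", anomaly, {"warehouse": "data-platform"})
```

The minimum dollar increase keeps tiny services from paging anyone over a statistically large but financially trivial jump.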

Selection criteria that actually matter:

Multi-cloud support if you are serious about it

Allocation accuracy (including shared costs)

Kubernetes and containers support

Unit cost reporting

APIs and integrations (Jira, Slack, IaC pipelines)

Governance controls and auditability

Implementation tip:

Start with 2 to 3 workflows that pay back fast. Usually, anomaly alerts, idle cleanup, and commitment tracking. If you try to automate everything in week one, you will automate confusion.

How to choose cloud cost management tools (without buying shelfware)

Shelfware happens when you buy a platform before you have ownership, tagging, and basic workflows. Then the tool becomes an expensive dashboard that people stop opening.

Match tools to maturity:

Early stage: visibility, tagging support, simple budgets, and alerts

Growth: unit costs, governance, better allocation across teams and environments

Scale: automation, forecasting, commitment optimization, multi-cloud normalization

Non-negotiables for most teams:

Accurate allocation

Anomaly detection

Forecasting

Commitment tracking

Integrations with Slack and Jira

Ability to export data cleanly

If you run Kubernetes or shared platforms, push hard on this:

Can the tool allocate shared cluster and platform costs fairly? Can it handle namespaces, labels, node pools, and idle costs? If not, your most political cost conversations will stay political.

A proof of value checklist for trials:

Can it answer “who owns this cost” within minutes?

Can it show “what changed” when spend spikes?

Can it recommend an action that saves money safely, and help you execute it?

Procurement note:

Ask vendors for a transparent methodology. How do they allocate shared costs? How do they calculate savings? Can you export raw data? If it is a black box, it will become a trust problem later.

30-day cloud cost rollout plan

| Week | Focus | Key Actions |
|---|---|---|
| Week 1 | Baseline | Run the baseline assessment |
| Week 2 | Guardrails | Implement budgets & anomaly alerts |
| Week 3 | Commitments | Draft commitment strategy |
| Week 4 | Cadence | Ship unit cost reporting |

Common mistakes that erase savings (and how to avoid them)

Optimizing without allocation

You save money, but cannot prove where it came from. Teams do not trust it. Savings fade.

Breaking performance to save cost

No SLOs, no rollback plan, no guardrails. You save dollars and lose customers. Not worth it.

Ignoring data movement

You optimize compute, meanwhile egress grows and quietly wins.

One-time cleanup mentality

No automation, no cadence, no owners. The bill creeps back.

Tool-first buying

A platform does not fix missing tags, missing ownership, or missing workflows. It just visualizes the mess.

Wrapping up: build a system, not a one-time cost-cutting sprint

The 9 cloud cost management strategies above are not meant to be a checklist you do once. They are meant to stack into a system.

Visibility and accountability (tagging, allocation, unit costs)

Governance that scales (guardrails, policies, exception flows)

Optimization that sticks (commitments, rightsizing, scaling)

Workload-aware engineering (egress, Kubernetes, AI, and analytics)

Tool-driven workflows that turn insight into action

If you want a simple place to start, pick the top 1 or 2 services by spend. Then implement allocation and guardrails first. The rest becomes easier, and honestly less political.

And if you want help setting up FinOps processes, selecting the right FinOps tools, and implementing measurable optimization safely across engineering and finance, Amnic can support cloud cost management and optimization end-to-end.


FAQs

What is the difference between FinOps and cloud cost optimization?

FinOps is the operating model: shared accountability, reporting, governance, cadence. Cost optimization is the set of actions you take continuously inside that model.

How quickly can we reduce cloud costs without risking outages?

Most teams can find safe savings in 1 to 2 weeks through baseline visibility, anomaly alerts, scaling dev environments to zero, storage lifecycle rules, and cleanup of obviously idle resources. Riskier changes, like downsizing databases, should be SLO-driven and rolled out with canaries.

Do we need chargeback to manage cloud costs?

No. Start with showback. Make spend visible by team and product, build trust in allocation, and only move to chargeback when teams accept the numbers and the process feels fair.

What are the biggest cost drivers in 2026 cloud environments?

Common top drivers are managed databases, Kubernetes clusters with over-reserved capacity, data warehouses and lakehouses, AI training and inference, and egress or cross-region data movement.

How do we measure cloud cost efficiency properly?

Use unit cost. Cost per request, cost per customer, cost per inference, cost per GB processed. Pair it with reliability metrics so decisions balance cost and performance. For more insights on achieving this balance, refer to these best practices for mastering cloud reliability.

Which tools should we start with for cloud cost management?

Start with native billing tools plus one solution that supports allocation, anomaly detection, forecasting, and commitment tracking. If you run Kubernetes heavily, add a Kubernetes cost allocation tool early, because shared cluster costs get messy fast.

How often should we review cloud costs?

Weekly triage for anomalies and quick actions. Monthly reviews of unit cost trends, commitment coverage, and major optimization opportunities, ideally in a tool that can save charts so trends are easy to follow over time. Quarterly-only reviews are too slow in 2026.
