December 3, 2023

Running predictable and cost-optimized workloads on GKE

It is now the new normal: modernizing applications to run in software containers, managed by container orchestrators like Kubernetes, is an established trend.

Containerization has exploded as a practice in recent years and become a great way for development teams to move fast, deploy software efficiently, and operate at an unprecedented scale.

If you run Kubernetes yourself, you need to hire and retain engineers to operate it. Unless you have a certain minimum team size, or your business is closely adjacent to infrastructure, self-managed Kubernetes becomes a cost center. You could invest those resources in a function that actually drives your business instead.

Google Kubernetes Engine (GKE) brings you Kubernetes as a managed service hosted on Google's infrastructure. It is a fully managed compute platform with native support for containers, which means the underlying resources don’t have to be provisioned, and a container-optimized operating system is used to run workloads.

You can easily run containerized applications on a managed cluster rather than on an individual virtual machine such as a Compute Engine instance. A container packages code together with all its dependencies.

GKE provides a user-friendly, reliable, and feature-rich platform. But understanding and optimizing GKE costs can be daunting: the native pricing estimators are complex, and many factors influence the final bill.

In this article, we'll introduce and discuss some general goals to help guide your decisions with GKE. We will focus on the GKE pricing model and best practices for cost optimization. Some of these suggestions apply equally to running managed Kubernetes on other cloud platforms or in other contexts.

GKE Pricing Model

Let’s look at the different pricing models for GKE. Keep in mind that actual costs vary with your usage and resource footprint. You can also get a ballpark GKE pricing estimate from the Google Cloud pricing calculator.

The GKE pricing information is sourced from the living pricing document of GKE.

Free Tier

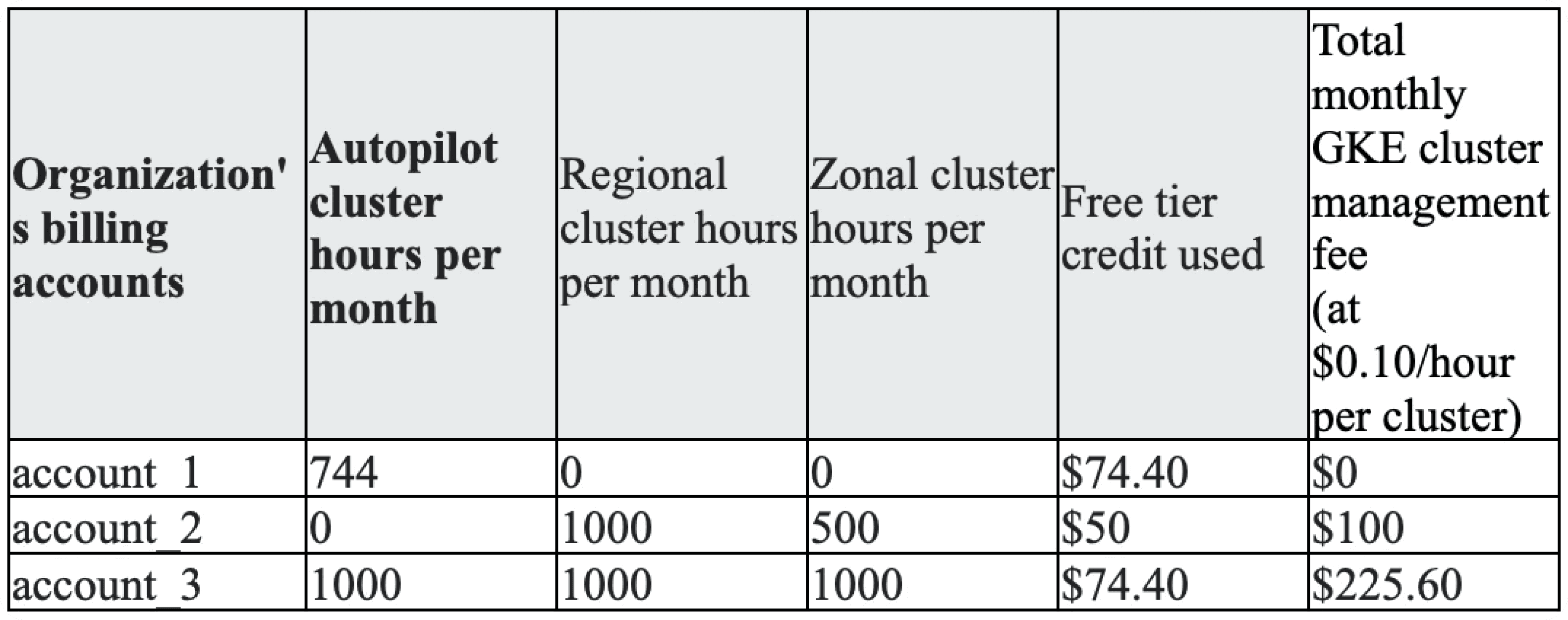

Google Kubernetes Engine offers a free tier that includes a 744-hour allocation of Autopilot cluster usage per month, which allows users to run a managed Kubernetes cluster.

Users also receive $74.40 in monthly credits per billing account, which equals 744 hours at the $0.10 per hour cluster management fee. The credit applies to both zonal and Autopilot clusters.

GKE charges a fee to manage clusters: $0.10 per cluster per hour, which comes to roughly $73 for a 730-hour month. The free tier credit offsets this fee for a single zonal or Autopilot cluster; each additional cluster is billed at the full rate.

Free tier credits are limited and not sufficient for production-level workloads.
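The arithmetic behind the free tier can be sketched in a few lines. This is a rough illustration, not an official calculator: it assumes the $0.10 hourly management fee quoted later in this article and treats the credit as 744 hours (a 31-day month) at that rate. The function names are made up for this example.

```python
from decimal import Decimal

# Hourly cluster management fee quoted in the Autopilot and Standard sections.
MANAGEMENT_FEE_PER_HOUR = Decimal("0.10")


def monthly_management_fee(hours_in_month: int, clusters: int = 1) -> Decimal:
    """Management fee for `clusters` clusters that run for the whole month."""
    return MANAGEMENT_FEE_PER_HOUR * hours_in_month * clusters


def fee_after_credit(fee: Decimal, credit: Decimal) -> Decimal:
    """Apply the free tier credit; the result never drops below zero."""
    return max(fee - credit, Decimal("0"))


# Assumption: the credit is worth 744 hours (a 31-day month) at the hourly fee.
free_tier_credit = monthly_management_fee(744)

one_cluster = fee_after_credit(monthly_management_fee(744, clusters=1), free_tier_credit)
two_clusters = fee_after_credit(monthly_management_fee(744, clusters=2), free_tier_credit)

print(f"Credit: ${free_tier_credit:.2f}")
print(f"One cluster after credit: ${one_cluster:.2f}")
print(f"Two clusters after credit: ${two_clusters:.2f}")
```

Under these assumptions the credit cancels the management fee for one cluster, and a second cluster is billed in full. Node or Pod compute is not covered in either case.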

Autopilot Mode

In a traditional managed Kubernetes environment, most users overprovision clusters for scaling and do not 'bin pack' nodes efficiently. This all results in paying for infrastructure they aren’t using.

GKE Autopilot, the default and recommended mode of operation in the cluster creation interface, can save you a substantial amount on your Kubernetes total cost of ownership (TCO), including operational savings, compared with traditional managed Kubernetes.

Autopilot pricing starts with a flat $0.10/hour fee per cluster, which covers cluster management. Compute is billed on top of that fee, based on the CPU, memory, and ephemeral storage resources requested by your currently scheduled Pods. Users have the flexibility to start and stop clusters as needed, with no minimum duration.

This means that you pay only for the resources your Pods request, not for idle node capacity.
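To make the request-based billing concrete, here is a minimal sketch of how an Autopilot bill could be estimated from Pod requests. The per-unit rates are placeholders chosen for illustration, not Google's published prices, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResourceRates:
    """Hourly price per unit. The values used below are placeholders."""
    per_vcpu: float
    per_gib_memory: float
    per_gib_ephemeral: float


@dataclass(frozen=True)
class PodRequest:
    """Resources a scheduled Pod requests (not what it actually consumes)."""
    vcpu: float
    memory_gib: float
    ephemeral_gib: float


def pod_hourly_cost(pod: PodRequest, rates: ResourceRates) -> float:
    """Cost of one Pod for one hour, driven purely by its requests."""
    return (
        pod.vcpu * rates.per_vcpu
        + pod.memory_gib * rates.per_gib_memory
        + pod.ephemeral_gib * rates.per_gib_ephemeral
    )


def cluster_hourly_cost(pods, rates: ResourceRates, management_fee: float = 0.10) -> float:
    """Flat management fee plus the sum of all Pod request costs."""
    return management_fee + sum(pod_hourly_cost(p, rates) for p in pods)


# Placeholder rates: look up current prices for your region before relying on this.
example_rates = ResourceRates(per_vcpu=0.05, per_gib_memory=0.005, per_gib_ephemeral=0.0001)
example_pods = [PodRequest(0.5, 2, 1), PodRequest(1, 4, 10)]

print(f"Estimated hourly cost: ${cluster_hourly_cost(example_pods, example_rates):.4f}")
```

The practical takeaway is that oversized requests cost money in Autopilot even when the Pod sits idle, so rightsizing requests is the main cost lever.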

Standard Mode

Standard mode carries a management fee of $0.10/hour per cluster, regardless of cluster size or topology; Anthos clusters are excluded from this fee. Keep other GKE costs in mind as well: the underlying infrastructure and any additional Google Cloud services used alongside the cluster.

Let's use a basic example. Say you want to deploy a 4-node GKE cluster in the us-central1 region using the n1-standard-1 machine type.

The node cost of this cluster is:

Cost per node: $0.025 per hour (an illustrative rate)

Number of nodes: 4 nodes

Cost per hour: $0.025 per hour/node * 4 nodes = $0.10 per hour

Annual cost: $0.10 per hour * 24 hours/day * 365 days/year = $876 per year

This figure covers node compute only; the cluster management fee is billed on top of it. The actual cost of your cluster will vary based on the resource footprint.
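The same calculation can be written as a short script so you can plug in your own node count and rate. This is a sketch: the $0.025 rate is the illustrative figure from the example above rather than a quoted price, and the function name is hypothetical.

```python
from decimal import Decimal

HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365


def annual_cost(rate_per_hour: Decimal, quantity: int = 1) -> Decimal:
    """Annual cost of `quantity` items billed at `rate_per_hour`, running 24/7."""
    return rate_per_hour * quantity * HOURS_PER_DAY * DAYS_PER_YEAR


# Illustrative node rate from the example above, not a quoted price.
node_cost = annual_cost(Decimal("0.025"), quantity=4)

# Cluster management fee of $0.10 per hour, billed on top of the nodes.
management_cost = annual_cost(Decimal("0.10"))

print(f"Nodes: ${node_cost:.2f} per year")
print(f"Management fee: ${management_cost:.2f} per year")
print(f"Total: ${node_cost + management_cost:.2f} per year")
```

With these inputs the management fee is as large as the node cost itself, which is why small clusters benefit most from the free tier credit.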

Reservations and Committed Use Discounts

Reservations are most useful for getting a discount when you are confident you will utilize the service in question for an extended period.

GKE Reservations have the following traits:

Committed use discounts automatically apply to GKE VMs.

No upfront cost for committed use discounts and reservations.

You cannot modify, resell, or cancel unneeded capacity.

You commit to a certain number of vCPUs and amount of memory rather than to a specific instance size within a machine family.

Custom machine types with unique vCPU and memory configurations are an option.

GPUs and Local SSDs can be included in the commitment.

Memory-optimized, compute-optimized, or accelerator-optimized machine-type commitments are available for purchase.

Another factor to consider: a committed use discount (a one- or three-year discounted pricing agreement) for your predictable workloads does not guarantee that you can launch an instance in a specific region or configuration. If you want that guarantee, you need to purchase a zonal reservation. A reservation has no contract term, which means you can purchase one in the morning and terminate it in the evening.

By combining the reservation with the commitment, you can get discounted reserved resources. Note, however, that commitments are decoupled from reservations: you can purchase commitments and reserve capacity separately, and operate on an as-needed basis.
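Because a commitment is billed for the whole term whether or not you use it, the decision comes down to expected utilization. The sketch below works out the break-even point under a simple model; the 30% discount is a placeholder, not a published rate, and the function names are hypothetical.

```python
def break_even_utilization(discount: float) -> float:
    """Fraction of the term you must actually use for a commitment to pay off.

    A commitment costs (1 - discount) of the on-demand rate for every hour of
    the term; on-demand costs the full rate only for the hours you run.
    """
    if not 0 <= discount < 1:
        raise ValueError("discount must be in the range [0, 1)")
    return 1.0 - discount


def cheaper_option(on_demand_rate: float, discount: float, utilization: float) -> str:
    """Compare average hourly cost over the term for both purchase options."""
    on_demand_cost = on_demand_rate * utilization
    committed_cost = on_demand_rate * (1 - discount)
    return "commitment" if committed_cost < on_demand_cost else "on-demand"


# Placeholder discount for illustration; check the current rates for your machine type.
assumed_discount = 0.30
print(f"Break-even utilization: {break_even_utilization(assumed_discount):.0%}")
print(cheaper_option(on_demand_rate=0.10, discount=assumed_discount, utilization=0.9))
print(cheaper_option(on_demand_rate=0.10, discount=assumed_discount, utilization=0.5))
```

In this model a workload that runs less than the break-even share of the term is cheaper on demand, which is why commitments suit steady baseline load rather than bursty workloads.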

Spot Instances

Spot VMs are more affordable than standard Compute Engine instances. To use Spot VMs in your clusters and node pools, you simply specify Spot VMs when you create them. There is no bidding: Google Cloud sets the Spot price, so you do not set a maximum price.

The main advantage of using Spot instances is that they carry the deepest discount of any pricing option: 60-91% off on-demand pricing.
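To see what that discount range means for a given machine, the sketch below converts an on-demand rate into the corresponding Spot price band. The on-demand rate is the illustrative $0.025 figure used earlier in this article, and the function name is hypothetical.

```python
from decimal import Decimal

# Discount range quoted above for Spot pricing relative to on-demand.
MIN_DISCOUNT = Decimal("0.60")
MAX_DISCOUNT = Decimal("0.91")


def spot_price_range(on_demand_rate: Decimal) -> tuple[Decimal, Decimal]:
    """Return (lowest, highest) hourly Spot price implied by the discount range."""
    lowest = on_demand_rate * (1 - MAX_DISCOUNT)
    highest = on_demand_rate * (1 - MIN_DISCOUNT)
    return lowest, highest


# Illustrative on-demand rate, not a quoted price.
low, high = spot_price_range(Decimal("0.025"))
print(f"Spot price band: ${low:.5f} to ${high:.5f} per hour")
```

The actual discount depends on machine type and region and changes over time, so treat the band as a planning range rather than a guaranteed price.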

But there are drawbacks, the main one being potential workload interruptions. There is no availability guarantee and no SLA, and the older preemptible VMs are additionally limited to 24 hours of runtime.

If Compute Engine needs to reclaim the resources used by a Spot instance, the instance is terminated after a short warning, typically about 30 seconds in GKE.
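Because the termination warning is short, workloads placed on Spot nodes should request them explicitly and shut down quickly. The sketch below builds a Pod manifest as a Python dictionary; it assumes the `cloud.google.com/gke-spot` node label that GKE applies to Spot nodes, while the Pod name, image, and 25-second grace period are hypothetical values chosen for illustration.

```python
import json


def spot_pod_manifest(name: str, image: str, grace_seconds: int = 25) -> dict:
    """Build a Pod manifest that targets Spot nodes and exits quickly.

    `grace_seconds` is an assumed value kept below the short termination
    warning described above; tune it for your own workload.
    """
    if grace_seconds <= 0:
        raise ValueError("grace_seconds must be positive")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Schedule only onto nodes that GKE has labeled as Spot.
            "nodeSelector": {"cloud.google.com/gke-spot": "true"},
            # Time the container gets to exit after it receives SIGTERM.
            "terminationGracePeriodSeconds": grace_seconds,
            "containers": [{"name": name, "image": image}],
        },
    }


# Hypothetical name and image; Kubernetes accepts manifests in JSON as well as YAML.
manifest = spot_pod_manifest("batch-worker", "example.com/batch-worker:latest")
print(json.dumps(manifest, indent=2))
```

Spot capacity suits fault-tolerant work such as batch jobs or stateless services with replicas on regular nodes; anything that cannot tolerate a sudden restart belongs elsewhere.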

GKE Cost Optimization

The changing economics of the cloud can be intimidating for organizations when it comes to forecasting, budgeting, and cost management. Every cloud provider has zero incentive to offer great cost controls to paying customers, as it would mean users paying less—a situation no cloud provider wants, especially in the current macroeconomic climate.

Kubernetes, with new releases every few months, requires attention and FinOps expertise, especially in the case of GKE. Amnic offers hands-free Kubernetes visibility and cost optimization to minimize your GKE pricing.

Amnic delivers a cloud cost observability platform that helps measure and rightsize cloud costs continuously. It is agentless, secure and allows users to get started in five minutes at no cost.

Amnic provides a suite of features such as cost explorer, K8s visibility, custom dashboards, benchmarking, anomaly detection, alerts, K8s optimization and more. Businesses save 25-30% on their cloud costs, even on pre-optimized environments.

Amnic provides precise recommendations across network, storage and compute, based on your cloud infrastructure to identify high costs and industry best practices to reduce them. With Amnic, organizations can successfully build a roadmap towards lean cloud infrastructure and build a culture of cost optimization among their teams.

Visit www.amnic.com to learn more about how you can get started on your cloud cost optimization journey.